Artificial Intelligence

Recent Challenges in Artificial Intelligence – Adversarial Attacks

September 19, 2019

In Brief

- Artificial Intelligence, and machine learning in particular, is a recent trend in computer science and a major technological leap forward.

- Despite novel and effective algorithms, Intrusion Detection System (IDS) models remain ineffective against adversarial attacks.

- Effective IDS models that can combat such attacks are needed, and building them will be a major objective of future IDS research.

Adversarial attacks are inputs deliberately designed by attackers to cause a model to make mistakes (Wang, Li, Kuang, Tan, & Li, 2019). With image data, the attacker modifies an image so slightly that it looks unchanged to a human observer, yet the model recognises it as a different object. This is similar to creating an optical illusion for the model (Chen et al., 2018). This attack mostly works on image datasets rather than on textual datasets.
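Why can a change that a human cannot see fool a model? One explanation often given in the adversarial machine learning literature is that many models behave almost linearly. If every pixel moves by a tiny amount ε in the direction of the sign of the corresponding weight, the score of a linear model changes by w·(x + η) − w·x = ε·Σ|wᵢ|, which becomes large when an image has many pixels. The Python sketch below illustrates this on a hypothetical linear model; the image size, the random weights and the value of ε are assumptions chosen for illustration, not values from the cited studies.

```python
import random

def dot(w, x):
    """Score of a linear model: the weighted sum of pixel values."""
    return sum(wi * xi for wi, xi in zip(w, x))

def sign(v):
    """Return +1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)

def sign_perturbation(w, eps):
    """Change every pixel by exactly eps, in the direction that raises the score."""
    return [eps * sign(wi) for wi in w]

random.seed(0)
n_pixels = 784                                            # hypothetical 28x28 greyscale image
w = [random.uniform(-1.0, 1.0) for _ in range(n_pixels)]  # hypothetical trained weights
x = [random.uniform(0.1, 0.9) for _ in range(n_pixels)]   # hypothetical clean image

eps = 0.01                                                # assumed per-pixel budget (1% of the range)
eta = sign_perturbation(w, eps)
x_adv = [xi + ei for xi, ei in zip(x, eta)]

shift = dot(w, x_adv) - dot(w, x)
l1 = sum(abs(wi) for wi in w)
print(f"largest pixel change: {max(abs(e) for e in eta):.3f}")
print(f"change in score: {shift:.3f}, eps * sum|w| = {eps * l1:.3f}")
```

Each pixel changes by only 0.01, yet because all 784 small changes push the score in the same direction, the total shift in the score is several hundred times larger than any single pixel change.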

Adversarial attacks are difficult to repel, which makes securing systems against them hard. Security against adversarial attacks is still at an early stage and offers great scope for future research. Intrusion Detection Systems (IDS) are essential for fighting these attacks.

However, modern IDS are still ineffective against adversarial attacks, which leaves a major security hole in the system. These defects must be addressed as soon as possible to achieve more effective security. Even though deep learning is seen as a revolutionary branch of neural network research with higher accuracy, it still fails to classify adversarial inputs correctly (Marra, Gragnaniello, & Verdoliva, 2018).


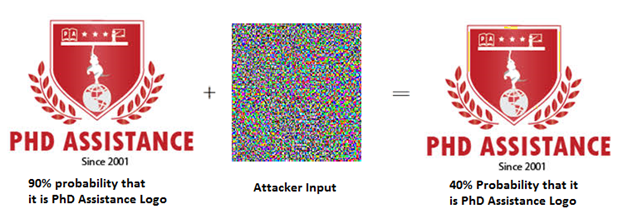

Figure 1 shows an example of an adversarial attack. The trained model is 90% confident that the displayed image is the PhD Assistance logo. The attacker then tampers with the input, altering its pixel values slightly. Although the image looks the same to the human eye, the image detection model now misreads it and classifies it as some other object: the probability assigned to the logo drops, and the probability assigned to another object rises.
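The article does not say how the pixel changes in Figure 1 were produced. One well-known way to craft them is the Fast Gradient Sign Method (FGSM), which moves every pixel by a small step ε in the direction that increases the model's loss. The sketch below applies FGSM to a hypothetical logistic-regression "logo detector" whose bias is chosen so that the clean image scores exactly 90%, mirroring the example. The model, the random image and ε = 0.02 are assumptions for illustration only.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def logo_probability(w, b, x):
    """Hypothetical logistic 'logo detector': P(image is the logo)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, eps):
    """FGSM for the true label 'logo' (y = 1) under the loss -log p.

    dLoss/dx_i = -(1 - p) * w_i, so each pixel moves by eps along the sign
    of that gradient and is then clipped back into the valid range [0, 1].
    """
    p = logo_probability(w, b, x)
    grad = [-(1.0 - p) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * math.copysign(1.0, g))) for xi, g in zip(x, grad)]

random.seed(1)
n_pixels = 784                                             # hypothetical 28x28 image
w = [random.uniform(-1.0, 1.0) for _ in range(n_pixels)]   # hypothetical trained weights
x = [random.uniform(0.1, 0.9) for _ in range(n_pixels)]    # hypothetical clean image
b = math.log(9.0) - sum(wi * xi for wi, xi in zip(w, x))   # bias chosen so P(logo) = 0.9

eps = 0.02                                                 # assumed per-pixel budget
x_adv = fgsm(w, b, x, eps)

p_clean = logo_probability(w, b, x)
p_adv = logo_probability(w, b, x_adv)
print(f"P(logo) clean: {p_clean:.3f}  adversarial: {p_adv:.3f}")
print(f"largest pixel change: {max(abs(a - c) for a, c in zip(x_adv, x)):.3f}")
```

For a logistic model the gradient can be written by hand, which keeps the sketch dependency-free; against a deep network the same sign step would be taken on a gradient obtained by backpropagation.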

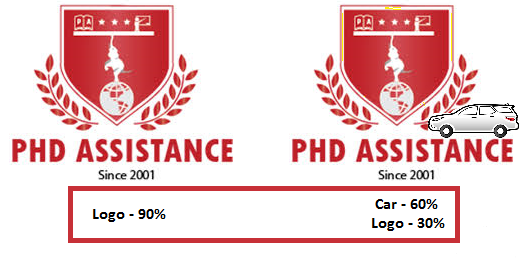

These attacks are so dangerous that modified images can still fool the classifier after they have been printed out and scanned. Figure 2 shows another form of adversarial attack. It contains a logo that the model may classify correctly with 90% probability. When a small object is overlaid on the image, the model's prediction changes and may even become a completely different class. In the figure, a small image of a car is overlaid on the logo, so the model predicts a car with higher probability. Such misdetections are dangerous because they can lead to serious incidents.
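The overlaid car in Figure 2 resembles what is often called an adversarial patch: instead of changing every pixel slightly, the attacker replaces a small region with content chosen to push the model towards a target class. The sketch below shows the idea on a hypothetical two-class linear model over an 8x8 image. The checkerboard "car" weights, the bias that makes the clean image score 90% "logo", and the 3x3 patch position are all assumptions made for illustration.

```python
import math

SIZE = 8  # hypothetical 8x8 greyscale image, pixel values in [0, 1]

# Hypothetical toy linear model with two classes: "car" responds to a
# checkerboard texture, while "logo" has a constant preference via its bias.
W_CAR = [[1.0 if (r + c) % 2 == 0 else -1.0 for c in range(SIZE)] for r in range(SIZE)]
W_LOGO = [[0.0] * SIZE for _ in range(SIZE)]
BIAS = {"logo": math.log(9.0), "car": 0.0}

def class_probs(img):
    """Softmax over the two linear class scores."""
    scores = {
        "logo": BIAS["logo"] + sum(W_LOGO[r][c] * img[r][c] for r in range(SIZE) for c in range(SIZE)),
        "car": BIAS["car"] + sum(W_CAR[r][c] * img[r][c] for r in range(SIZE) for c in range(SIZE)),
    }
    m = max(scores.values())
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}

def make_patch(top, left, size):
    """Patch pixels that favour 'car' over 'logo' for this linear model:
    1.0 where the car weight exceeds the logo weight, otherwise 0.0."""
    return [[1.0 if W_CAR[top + r][left + c] > W_LOGO[top + r][left + c] else 0.0
             for c in range(size)] for r in range(size)]

def overlay(img, patch, top, left):
    """Return a copy of img with the patch pasted at (top, left)."""
    out = [row[:] for row in img]
    for r, row in enumerate(patch):
        for c, value in enumerate(row):
            out[top + r][left + c] = value
    return out

clean = [[0.5] * SIZE for _ in range(SIZE)]  # hypothetical image the model labels "logo"
patch = make_patch(2, 3, 3)                  # 3x3 patch covers 9 of the 64 pixels
patched = overlay(clean, patch, 2, 3)

before = class_probs(clean)
after = class_probs(patched)
print(f"clean:   logo={before['logo']:.2f} car={before['car']:.2f}")
print(f"patched: logo={after['logo']:.2f} car={after['car']:.2f}")
```

Covering only 9 of the 64 pixels moves this toy model from 90% "logo" to about 91% "car", because every patch pixel is set to the value that most favours the target class.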

In real-world settings, this can be dangerous because an attacker may fool automated systems into acting on false conclusions (Iglesias, Milosevic, & Zseby, 2019). For example, self-driving cars may misread road signs, resulting in accidents. The attack may take place either before or after the training phase. When it takes place before training, the attacker targets the training data, so the model is trained incorrectly. When it takes place after training, the attacker targets the test data, so the classification is performed incorrectly.

The most widely used approaches have been shown to be vulnerable to these attacks, which reduces the performance of the model. The change in the model's output is approximately linearly proportional to the size of the pixel perturbations (Ozdag, 2018). Newer machine learning techniques can be compromised in unexpected ways, and even simple inputs may break them, leading to abnormal behaviour of the algorithms.
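The two attack timings described above (before and after training) can be contrasted on a deliberately tiny example. The sketch below uses a hypothetical nearest-centroid classifier on two-dimensional "benign" and "malicious" feature vectors; all data points are made up for illustration. A poisoning attack adds mislabelled points to the training data before training, while an evasion attack leaves the model untouched and shifts a test input after training.

```python
from statistics import mean

def train_centroids(data):
    """'Train' a nearest-centroid classifier: average feature vector per label."""
    by_label = {}
    for features, label in data:
        by_label.setdefault(label, []).append(features)
    return {label: tuple(mean(col) for col in zip(*points))
            for label, points in by_label.items()}

def classify(centroids, x):
    """Predict the label whose centroid is closest (squared Euclidean distance)."""
    return min(centroids,
               key=lambda label: sum((c - v) ** 2 for c, v in zip(centroids[label], x)))

# Hypothetical 2-D feature vectors, made up for illustration.
clean_train = [
    ((0.0, 0.0), "benign"), ((1.0, 0.0), "benign"), ((0.0, 1.0), "benign"),
    ((5.0, 5.0), "malicious"), ((6.0, 5.0), "malicious"), ((5.0, 6.0), "malicious"),
]
test_point = (4.0, 4.0)  # a malicious sample the defender wants to catch

model = train_centroids(clean_train)

# Poisoning (before training): the attacker injects malicious-looking points labelled "benign".
poison = [((6.0, 6.0), "benign")] * 3
poisoned_model = train_centroids(clean_train + poison)

# Evasion (after training): the clean model is kept, but the test input is
# shifted just across its decision boundary.
evasive_point = (2.8, 2.8)

print("clean model, original input:   ", classify(model, test_point))
print("poisoned model, original input:", classify(poisoned_model, test_point))
print("clean model, evasive input:    ", classify(model, evasive_point))
```

In both attacks a malicious sample ends up labelled "benign": the poisoned model has learned a distorted benign centroid, whereas the clean model is fooled by an input moved just across its decision boundary.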

It is difficult to build theoretical models of how adversarial examples are crafted. Because no established models exist for this optimisation problem, detecting these kinds of attack is very difficult. To detect adversarial attacks efficiently, machine learning approaches must produce reliable outputs and perform well even on manipulated inputs. As a result, most available techniques are not very effective at predicting adversarial attacks, even though they are very efficient at detecting ordinary attacks. Creating an effective defence approach is therefore essential for the future, and more adaptive techniques will be the major objective of future Intrusion Detection Systems.

- Chen, S., Xue, M., Fan, L., Hao, S., Xu, L., Zhu, H., & Li, B. (2018). Automated poisoning attacks and defenses in malware detection systems: An adversarial machine learning approach. Computers & Security, 73, 326–344. https://doi.org/10.1016/j.cose.2017.11.007

- Iglesias, F., Milosevic, J., & Zseby, T. (2019). Fuzzy classification boundaries against adversarial network attacks. Fuzzy Sets and Systems, 368, 20–35. https://doi.org/10.1016/j.fss.2018.11.004

- Marra, F., Gragnaniello, D., & Verdoliva, L. (2018). On the vulnerability of deep learning to adversarial attacks for camera model identification. Signal Processing: Image Communication, 65, 240–248. https://doi.org/10.1016/j.image.2018.04.007

- Ozdag, M. (2018). Adversarial attacks and defenses against deep neural networks: A survey. Procedia Computer Science, 140, 152–161. https://doi.org/10.1016/j.procs.2018.10.315

- Wang, X., Li, J., Kuang, X., Tan, Y., & Li, J. (2019). The security of machine learning in an adversarial setting: A survey. Journal of Parallel and Distributed Computing, 130, 12–23. https://doi.org/10.1016/j.jpdc.2019.03.003
